- Tuning Checkpoints and Large State

- Overview

- Monitoring State and Checkpoints

- Tuning Checkpointing

- Tuning Network Buffers

- Asynchronous Checkpointing

- Tuning RocksDB

- Capacity Planning

- Compression

- Task-Local Recovery

- Motivation

- Approach

- Relationship of primary (distributed store) and secondary (task-local) state snapshots

- Configuring task-local recovery

- Details on task-local recovery for different state backends

- Allocation-preserving scheduling

Tuning Checkpoints and Large State

This page describes how to configure and tune applications that use large state.

Overview

For Flink applications to run reliably at large scale, two conditions must be fulfilled:

- The application needs to be able to take checkpoints reliably.

- The resources need to be sufficient to catch up with the input data streams after a failure.

The first sections discuss how to get well-performing checkpoints at scale. The last section explains some best practices for planning how many resources to use.

Monitoring State and Checkpoints

The easiest way to monitor checkpoint behavior is via the UI’s checkpoint section. The documentation for checkpoint monitoring shows how to access the available checkpoint metrics.

The two numbers that are of particular interest when scaling up checkpoints are:

- The time until operators start their checkpoint: This time is currently not exposed directly, but corresponds to:

checkpoint_start_delay = end_to_end_duration - synchronous_duration - asynchronous_duration

When the time to trigger the checkpoint is constantly very high, it means that the checkpoint barriers need a long time to travel from the source to the operators. That typically indicates that the system is operating under constant backpressure.

- The amount of data buffered during alignments. For exactly-once semantics, Flink aligns the streams at operators that receive multiple input streams, buffering some data for that alignment. The buffered data volume is ideally low. Higher amounts mean that checkpoint barriers are received at very different times from the different input streams.

Note that the numbers indicated here can occasionally be high in the presence of transient backpressure, data skew, or network issues. However, if the numbers are constantly very high, it means that Flink puts many resources into checkpointing.
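The start-delay formula above can be evaluated by hand from the three durations shown for a checkpoint. The following is a minimal sketch in plain Java; the class and method names are hypothetical, the input values are made up, and the clamp to zero is an assumption of this sketch rather than something Flink does.

```java
import java.time.Duration;

/** Derives the checkpoint start delay from the durations reported for one checkpoint. */
final class CheckpointStartDelay {

    /** checkpoint_start_delay = end_to_end_duration - synchronous_duration - asynchronous_duration */
    static Duration startDelay(Duration endToEnd, Duration synchronous, Duration asynchronous) {
        Duration delay = endToEnd.minus(synchronous).minus(asynchronous);
        // Defensive clamp (assumption of this sketch): never report a negative delay.
        return delay.isNegative() ? Duration.ZERO : delay;
    }

    public static void main(String[] args) {
        // Made-up example values: 42 s end to end, 300 ms synchronous part, 9 s asynchronous part.
        Duration delay =
                startDelay(Duration.ofSeconds(42), Duration.ofMillis(300), Duration.ofSeconds(9));
        System.out.println("checkpoint start delay: " + delay.toMillis() + " ms");
    }
}
```

A delay that dominates the end-to-end duration across many checkpoints would point at barriers travelling slowly, which is the backpressure symptom described above.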

Tuning Checkpointing

Checkpoints are triggered at regular intervals that applications can configure. When a checkpoint takes longer to complete than the checkpoint interval, the next checkpoint is not triggered before the in-progress checkpoint completes. By default the next checkpoint will then be triggered immediately once the ongoing checkpoint completes.

When checkpoints frequently end up taking longer than the base interval (for example because state grew larger than planned, or the storage where checkpoints are stored is temporarily slow), the system is constantly taking checkpoints (new ones are started immediately once ongoing ones finish). That can mean that too many resources are constantly tied up in checkpointing and that the operators make too little progress. This behavior has less impact on streaming applications that use asynchronously checkpointed state, but may still have an impact on overall application performance.

To prevent such a situation, applications can define a minimum duration between checkpoints:

StreamExecutionEnvironment.getCheckpointConfig().setMinPauseBetweenCheckpoints(milliseconds)

This duration is the minimum time interval that must pass between the end of the latest checkpoint and the beginning of the next. The figure below illustrates how this impacts checkpointing.

Note: Applications can be configured (via the CheckpointConfig) to allow multiple checkpoints to be in progress at the same time. For applications with large state in Flink, this often ties up too many resources in checkpointing. When a savepoint is manually triggered, it may be in progress concurrently with an ongoing checkpoint.
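To make the effect of the minimum pause concrete, the sketch below computes checkpoint trigger times under a simplified model: at most one checkpoint is in flight, and the next trigger has to wait for both the regular interval and the minimum pause after the previous checkpoint ended. The class name, the model, and the durations are assumptions for illustration; this is not Flink's checkpoint coordinator.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified model of checkpoint triggering with a minimum pause between checkpoints. */
final class MinPauseSimulation {

    /**
     * Returns the trigger time (in ms) of each checkpoint.
     * Assumption: checkpoint i takes durationsMs[i] and no checkpoints run concurrently.
     */
    static List<Long> triggerTimes(long intervalMs, long minPauseMs, long[] durationsMs) {
        List<Long> triggers = new ArrayList<>();
        long trigger = 0L;
        for (long duration : durationsMs) {
            triggers.add(trigger);
            long end = trigger + duration;
            // The next trigger waits for the interval AND for the pause after completion.
            trigger = Math.max(trigger + intervalMs, end + minPauseMs);
        }
        return triggers;
    }

    public static void main(String[] args) {
        long[] durations = {4_000, 12_000, 15_000, 3_000}; // made-up checkpoint durations
        System.out.println("no pause:  " + triggerTimes(10_000, 0, durations));
        System.out.println("5 s pause: " + triggerTimes(10_000, 5_000, durations));
    }
}
```

With a 10 s interval and no pause, a 12 s checkpoint is followed immediately by the next one. With a 5 s pause the operators get at least 5 s of uninterrupted processing after every slow checkpoint.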

Tuning Network Buffers

Before Flink 1.3, an increased number of network buffers also caused increased checkpointing times, since keeping more in-flight data meant that checkpoint barriers got delayed. Since Flink 1.3, the number of network buffers used per outgoing/incoming channel is limited, and thus network buffers may be configured without affecting checkpoint times (see network buffer configuration).

Asynchronous Checkpointing

When state is asynchronously snapshotted, the checkpoints scale better than when the state is synchronously snapshotted. Especially in more complex streaming applications with multiple joins, co-functions, or windows, this may have a profound impact.

For state to be snapshotted asynchronously, you need to use a state backend which supports asynchronous snapshotting. Starting from Flink 1.3, both RocksDB-based as well as heap-based state backends (filesystem) support asynchronous snapshotting and use it by default. This applies to both managed operator state as well as managed keyed state (incl. timers state).

Note: The combination of the RocksDB state backend with heap-based timers currently does NOT support asynchronous snapshots for the timers state. Other state like keyed state is still snapshotted asynchronously. Please note that this is not a regression from previous versions and will be resolved with FLINK-10026.

Tuning RocksDB

The state storage workhorse of many large scale Flink streaming applications is the RocksDB State Backend. The backend scales well beyond main memory and reliably stores large keyed state.

Unfortunately, RocksDB’s performance can vary with configuration, and there is little documentation on how to tune RocksDB properly. For example, the default configuration is tailored towards SSDs and performs suboptimally on spinning disks.

Incremental Checkpoints

Incremental checkpoints can dramatically reduce the checkpointing time in comparison to full checkpoints, at the cost of a (potentially) longer recovery time. The core idea is that incremental checkpoints only record the changes since the previous completed checkpoint, instead of producing a full, self-contained backup of the state backend. In this way, incremental checkpoints build upon previous checkpoints. Flink leverages RocksDB’s internal backup mechanism in a way that is self-consolidating over time. As a result, the incremental checkpoint history in Flink does not grow indefinitely, and old checkpoints are eventually subsumed and pruned automatically.

While we strongly encourage the use of incremental checkpoints for large state, please note that this is a new feature and currently not enabled by default. To enable this feature, users can instantiate a RocksDBStateBackend with the corresponding boolean flag in the constructor set to true, e.g.:

RocksDBStateBackend backend = new RocksDBStateBackend(filebackend, true);
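The idea of recording only changes since the previous checkpoint can be pictured as a set difference. The sketch below is a simplified model, assuming the state lives in immutable files identified by name; the class, method, and file names are hypothetical and it does not call any Flink or RocksDB API.

```java
import java.util.Set;
import java.util.TreeSet;

/** Simplified model of an incremental checkpoint as a set difference over immutable files. */
final class IncrementalUploadPlan {

    /** Files that exist now but were not part of the previous completed checkpoint. */
    static Set<String> filesToUpload(Set<String> previousCheckpointFiles, Set<String> currentFiles) {
        Set<String> delta = new TreeSet<>(currentFiles);
        delta.removeAll(previousCheckpointFiles);
        return delta;
    }

    /** Files the new checkpoint can reference from the previous one instead of uploading again. */
    static Set<String> filesToReuse(Set<String> previousCheckpointFiles, Set<String> currentFiles) {
        Set<String> shared = new TreeSet<>(currentFiles);
        shared.retainAll(previousCheckpointFiles);
        return shared;
    }

    public static void main(String[] args) {
        Set<String> previous = Set.of("000012.sst", "000013.sst"); // hypothetical file names
        Set<String> current = Set.of("000013.sst", "000014.sst", "000015.sst");
        System.out.println("upload: " + filesToUpload(previous, current));
        System.out.println("reuse:  " + filesToReuse(previous, current));
    }
}
```

In this model, a file that no retained checkpoint references any more (here `000012.sst`) can be pruned, which is one way to picture why the checkpoint history does not grow indefinitely.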

RocksDB Timers

For RocksDB, a user can choose whether timers are stored on the heap (default) or inside RocksDB. Heap-based timers can have better performance for smaller numbers of timers, while storing timers inside RocksDB offers higher scalability, as the number of timers in RocksDB can exceed the available main memory (spilling to disk).

When using RocksDB as state backend, the type of timer storage can be selected through Flink’s configuration via the option key state.backend.rocksdb.timer-service.factory. Possible choices are heap (to store timers on the heap, default) and rocksdb (to store timers in RocksDB).

Note: The combination of the RocksDB state backend with heap-based timers currently does NOT support asynchronous snapshots for the timers state. Other state like keyed state is still snapshotted asynchronously. Please note that this is not a regression from previous versions and will be resolved with FLINK-10026.

Predefined Options

Flink provides some predefined collections of options for RocksDB for different settings. There are two ways to pass these predefined options to RocksDB:

- Configure the predefined options through flink-conf.yaml via the option key state.backend.rocksdb.predefined-options. The default value of this option is DEFAULT, which means PredefinedOptions.DEFAULT.

- Set the predefined options programmatically, e.g.

RocksDBStateBackend.setPredefinedOptions(PredefinedOptions.SPINNING_DISK_OPTIMIZED_HIGH_MEM).

We expect to accumulate more such profiles over time. Feel free to contribute such predefined option profiles when you find a set of options that works well and seems representative for certain workloads.

Note: Predefined options that are set programmatically override the ones configured via flink-conf.yaml.

Passing Options Factory to RocksDB

There are two ways to pass an options factory to RocksDB in Flink:

- Configure the options factory through flink-conf.yaml. You can set the options factory class name via the option key state.backend.rocksdb.options-factory. The default value for this option is org.apache.flink.contrib.streaming.state.DefaultConfigurableOptionsFactory, and all candidate configurable options are defined in RocksDBConfigurableOptions. Moreover, you can also define your own customized and configurable options factory class like below and pass the class name to state.backend.rocksdb.options-factory.

    import org.apache.flink.configuration.Configuration;
    import org.apache.flink.contrib.streaming.state.ConfigurableOptionsFactory;
    import org.apache.flink.contrib.streaming.state.OptionsFactory;
    import org.rocksdb.BlockBasedTableConfig;
    import org.rocksdb.ColumnFamilyOptions;
    import org.rocksdb.DBOptions;

    public class MyOptionsFactory implements ConfigurableOptionsFactory {

        private static final long DEFAULT_SIZE = 256 * 1024 * 1024; // 256 MB
        private long blockCacheSize = DEFAULT_SIZE;

        @Override
        public DBOptions createDBOptions(DBOptions currentOptions) {
            // Database-wide settings.
            return currentOptions.setIncreaseParallelism(4)
                .setUseFsync(false);
        }

        @Override
        public ColumnFamilyOptions createColumnOptions(ColumnFamilyOptions currentOptions) {
            // Per-column-family settings: block cache size and block size.
            return currentOptions.setTableFormatConfig(
                new BlockBasedTableConfig()
                    .setBlockCacheSize(blockCacheSize)
                    .setBlockSize(128 * 1024)); // 128 KB
        }

        @Override
        public OptionsFactory configure(Configuration configuration) {
            // Read the custom key from the Flink configuration, falling back to the default.
            this.blockCacheSize =
                configuration.getLong("my.custom.rocksdb.block.cache.size", DEFAULT_SIZE);
            return this;
        }
    }

- Set the options factory programmatically, e.g.

RocksDBStateBackend.setOptions(new MyOptionsFactory());

Note: An options factory that is set programmatically overrides the one configured via flink-conf.yaml, and an options factory, if configured or set, takes priority over the predefined options.

Note: RocksDB is a native library that allocates memory directly from the process, and not from the JVM. Any memory you assign to RocksDB will have to be accounted for, typically by decreasing the JVM heap size of the TaskManagers by the same amount. Not doing that may result in YARN/Mesos/etc. terminating the JVM processes for allocating more memory than configured.
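The accounting described in the note above is plain subtraction. The sketch below shows it with made-up numbers; the class and method names are hypothetical, and both inputs are estimates supplied by the operator, not values read from Flink or RocksDB.

```java
/** Rough memory accounting for a TaskManager that runs RocksDB next to the JVM heap. */
final class TaskManagerMemoryBudget {

    /**
     * Returns the JVM heap size left after reserving native memory for RocksDB.
     * Assumption: containerBytes is the limit enforced by the resource manager.
     */
    static long heapBytes(long containerBytes, long rocksDbBytes) {
        if (rocksDbBytes < 0 || rocksDbBytes >= containerBytes) {
            throw new IllegalArgumentException("RocksDB budget must be in [0, containerBytes)");
        }
        return containerBytes - rocksDbBytes;
    }

    public static void main(String[] args) {
        long gib = 1024L * 1024 * 1024;
        // Made-up example: an 8 GiB container with 3 GiB set aside for RocksDB.
        System.out.println(heapBytes(8 * gib, 3 * gib) / gib + " GiB left for the JVM heap");
    }
}
```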

Capacity Planning

This section discusses how to decide how many resources should be used for a Flink job to run reliably. The basic rules of thumb for capacity planning are:

- Normal operation should have enough capacity to not operate under constant back pressure. See back pressure monitoring for details on how to check whether the application runs under back pressure.

- Provision some extra resources on top of the resources needed to run the program back-pressure-free during failure-free time. These resources are needed to “catch up” with the input data that accumulated during the time the application was recovering. How much that should be depends on how long recovery operations usually take (which depends on the size of the state that needs to be loaded into the new TaskManagers on a failover) and how fast the scenario requires failures to recover.

  Important: The baseline should be established with checkpointing activated, because checkpointing ties up some amount of resources (such as network bandwidth).

- Temporary back pressure is usually okay, and an essential part of execution flow control during load spikes, during catch-up phases, or when external systems (that are written to in a sink) exhibit temporary slowdown.

- Certain operations (like large windows) result in a spiky load for their downstream operators: In the case of windows, the downstream operators may have little to do while the window is being built, and have a lot to do when the windows are emitted. The planning for the downstream parallelism needs to take into account how much the windows emit and how fast such a spike needs to be processed.
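The “catch up” rule of thumb above can be turned into simple arithmetic. The sketch below assumes a constant input rate and a constant processing capacity, which real jobs rarely have; the class name and the numbers are made up for illustration.

```java
/** Back-of-the-envelope estimate of how long a job needs to catch up after a failure. */
final class CatchUpEstimate {

    /**
     * While the job was down, inputRate * downtime records piled up. After restart the backlog
     * shrinks only by the spare capacity (capacity - inputRate), because new input keeps arriving.
     */
    static double catchUpSeconds(double inputRatePerSec, double capacityPerSec, double downtimeSec) {
        if (capacityPerSec <= inputRatePerSec) {
            return Double.POSITIVE_INFINITY; // no spare capacity: the job never catches up
        }
        double backlog = inputRatePerSec * downtimeSec;
        return backlog / (capacityPerSec - inputRatePerSec);
    }

    public static void main(String[] args) {
        // Made-up example: 100k records/s input, 125k records/s capacity, 2 minutes of downtime.
        System.out.println(catchUpSeconds(100_000, 125_000, 120) + " s to catch up");
    }
}
```

In this example, 25% of extra capacity turns two minutes of downtime into eight minutes of catch-up, so the provisioned headroom directly determines how long the job lags behind after a failure.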

Important: In order to allow for adding resources later, make sure to set the maximum parallelism of the data stream program to a reasonable number. The maximum parallelism defines how high you can set the program’s parallelism when re-scaling the program (via a savepoint).

Flink’s internal bookkeeping tracks parallel state in the granularity of max-parallelism-many key groups. Flink’s design strives to make it efficient to have a very high value for the maximum parallelism, even if executing the program with a low parallelism.
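The role of the maximum parallelism can be illustrated with a small model of key groups. In the sketch below a key is mapped to one of maxParallelism key groups, and contiguous ranges of key groups are mapped to the currently running subtasks. The class name, the plain `hashCode`-based hash, and the range formula are assumptions of this sketch, not Flink's actual implementation.

```java
/** Simplified model of key groups: keys map to key groups, key groups map to subtasks. */
final class KeyGroupSketch {

    /** The key group depends only on the key and the maximum parallelism. */
    static int keyGroupFor(Object key, int maxParallelism) {
        // Assumption: a plain hashCode is enough for illustration.
        return Math.floorMod(key.hashCode(), maxParallelism);
    }

    /** Contiguous ranges of key groups are assigned to the running subtasks. */
    static int subtaskFor(int keyGroup, int maxParallelism, int parallelism) {
        if (parallelism < 1 || parallelism > maxParallelism) {
            throw new IllegalArgumentException("parallelism must be in [1, maxParallelism]");
        }
        return keyGroup * parallelism / maxParallelism;
    }

    public static void main(String[] args) {
        int maxParallelism = 128;
        int keyGroup = keyGroupFor(200, maxParallelism);
        System.out.println("key group: " + keyGroup);
        System.out.println("subtask at parallelism 4: " + subtaskFor(keyGroup, maxParallelism, 4));
        System.out.println("subtask at parallelism 8: " + subtaskFor(keyGroup, maxParallelism, 8));
    }
}
```

The key group of a key stays the same when the parallelism changes; only the assignment of whole key groups to subtasks moves. This is also why the parallelism cannot exceed the maximum parallelism in this model.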

Compression

Flink offers optional compression (default: off) for all checkpoints and savepoints. Currently, compression always uses the snappy compression algorithm (version 1.1.4), but we are planning to support custom compression algorithms in the future. Compression works on the granularity of key-groups in keyed state, i.e. each key-group can be decompressed individually, which is important for rescaling.

Compression can be activated through the ExecutionConfig:

ExecutionConfig executionConfig = new ExecutionConfig();
executionConfig.setUseSnapshotCompression(true);

Note: The compression option has no impact on incremental snapshots, because they use RocksDB’s internal format, which always uses snappy compression out of the box.
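Why compressing per key-group matters for rescaling can be shown with a small sketch. It compresses the bytes of each key-group separately, so that a single key-group can be read back without touching the others. Note the substitution: the sketch uses DEFLATE from `java.util.zip` because it ships with the JDK, whereas Flink uses snappy. The class and method names are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.HashMap;
import java.util.Map;
import java.util.zip.DeflaterOutputStream;
import java.util.zip.InflaterInputStream;

/** Compresses each key-group separately so that each one can be decompressed on its own. */
final class KeyGroupCompression {

    static byte[] compress(byte[] keyGroupBytes) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (DeflaterOutputStream deflater = new DeflaterOutputStream(out)) {
            deflater.write(keyGroupBytes);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toByteArray();
    }

    static byte[] decompress(byte[] compressed) {
        try (InflaterInputStream inflater =
                new InflaterInputStream(new ByteArrayInputStream(compressed))) {
            return inflater.readAllBytes();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** One independent compressed blob per key-group id. */
    static Map<Integer, byte[]> compressEach(Map<Integer, byte[]> bytesByKeyGroup) {
        Map<Integer, byte[]> compressed = new HashMap<>();
        bytesByKeyGroup.forEach((keyGroup, bytes) -> compressed.put(keyGroup, compress(bytes)));
        return compressed;
    }
}
```

A subtask that takes over only key-group 7 after rescaling would call `decompress` on that one blob and never read the others.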

Task-Local Recovery

Motivation

In Flink’s checkpointing, each task produces a snapshot of its state that is then written to a distributed store. Each task acknowledges a successful write of the state to the job manager by sending a handle that describes the location of the state in the distributed store. The job manager, in turn, collects the handles from all tasks and bundles them into a checkpoint object.

In case of recovery, the job manager opens the latest checkpoint object and sends the handles back to the corresponding tasks, which can then restore their state from the distributed storage. Using a distributed storage to store state has two important advantages. First, the storage is fault tolerant, and second, all state in the distributed store is accessible to all nodes and can be easily redistributed (e.g. for rescaling).

However, using a remote distributed store also has one big disadvantage: all tasks must read their state from a remote location, over the network. In many scenarios, recovery could reschedule failed tasks to the same task manager as in the previous run (of course there are exceptions like machine failures), but we still have to read remote state. This can result in long recovery times for large states, even if there was only a small failure on a single machine.

Approach

Task-local state recovery targets exactly this problem of long recovery times. The main idea is the following: for every checkpoint, each task not only writes its state to the distributed storage, but also keeps a secondary copy of the state snapshot in a storage that is local to the task (e.g. on local disk or in memory). Notice that the primary store for snapshots must still be the distributed store, because local storage does not ensure durability under node failures and also does not provide access for other nodes to redistribute state. This functionality still requires the primary copy.

However, for each task that can be rescheduled to the previous location for recovery, we can restore state from the secondary, local copy and avoid the costs of reading the state remotely. Given that many failures are not node failures, and node failures typically only affect one or very few nodes at a time, it is very likely that in a recovery most tasks can return to their previous location and find their local state intact. This is what makes local recovery effective in reducing recovery time.

Please note that this can come at some additional cost per checkpoint for creating and storing the secondary local state copy, depending on the chosen state backend and checkpointing strategy. For example, in most cases the implementation will simply duplicate the writes to the distributed store to a local file.

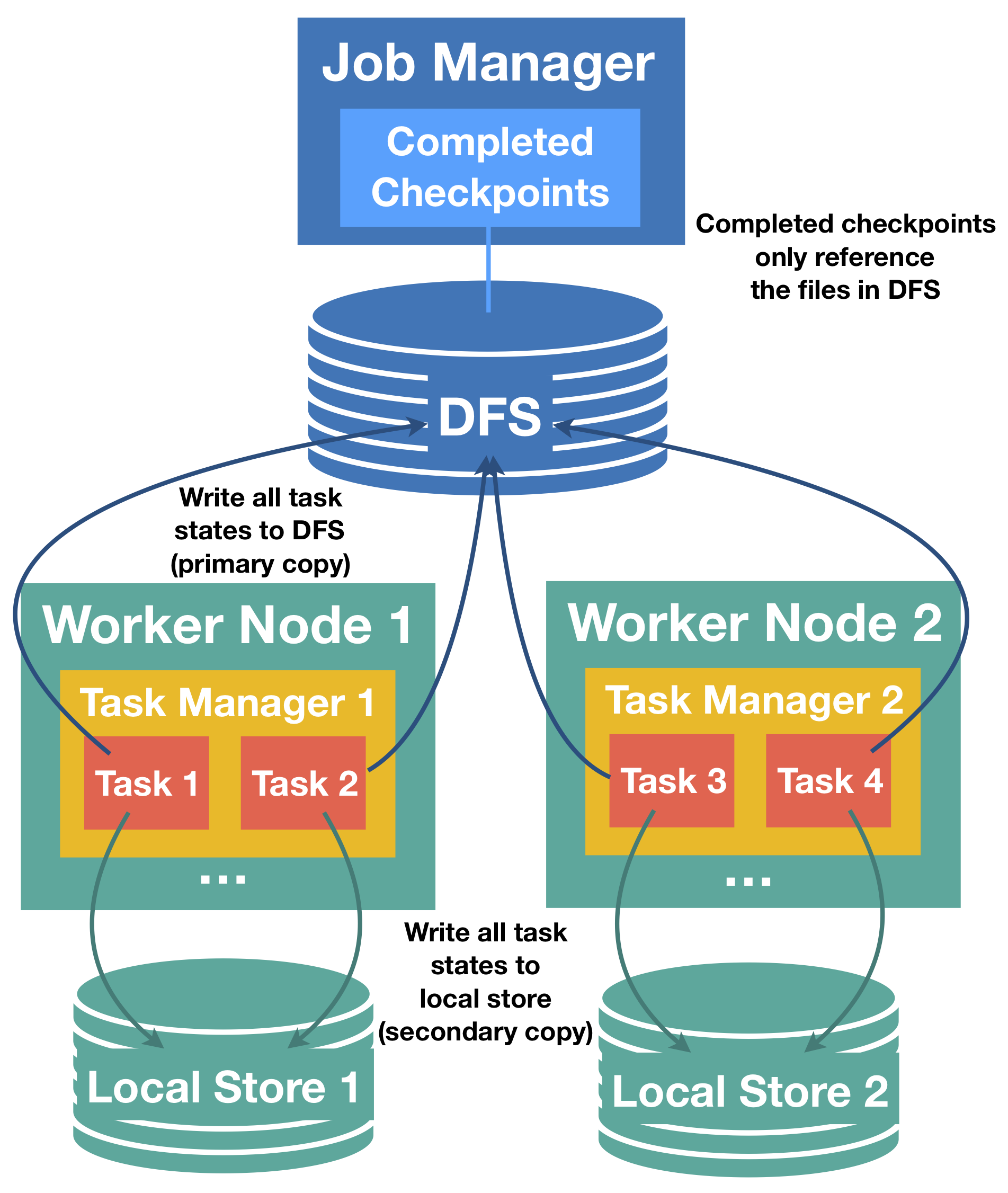

Relationship of primary (distributed store) and secondary (task-local) state snapshots

Task-local state is always considered a secondary copy; the ground truth of the checkpoint state is the primary copy in the distributed store. This has implications for problems with local state during checkpointing and recovery:

- For checkpointing, the primary copy must be successful, and a failure to produce the secondary, local copy will not fail the checkpoint. A checkpoint will fail if the primary copy could not be created, even if the secondary copy was successfully created.

- Only the primary copy is acknowledged and managed by the job manager; secondary copies are owned by task managers and their life cycles can be independent from their primary copies. For example, it is possible to retain a history of the 3 latest checkpoints as primary copies and only keep the task-local state of the latest checkpoint.

- For recovery, Flink will always attempt to restore from task-local state first, if a matching secondary copy is available. If any problem occurs during the recovery from the secondary copy, Flink will transparently retry to recover the task from the primary copy. Recovery only fails if both the primary and the (optional) secondary copy failed. In this case, depending on the configuration, Flink could still fall back to an older checkpoint.

- It is possible that the task-local copy contains only parts of the full task state (e.g. after an exception while writing one local file). In this case, Flink will first try to recover the local parts locally; non-local state is restored from the primary copy. Primary state must always be complete and is a superset of the task-local state.

- Task-local state can have a different format than the primary state; they are not required to be byte identical. For example, it could even be possible that the task-local state is an in-memory structure consisting of heap objects, and not stored in any files.

- If a task manager is lost, the local state from all its tasks is lost.
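The recovery order described above (secondary copy first, primary copy as fallback, failure only if both fail) can be sketched as a loop over state sources in priority order. This is a minimal illustration in plain Java; the class and method names are hypothetical and the suppliers stand in for whatever actually reads the state.

```java
import java.util.List;
import java.util.function.Supplier;

/** Restores state from the first copy that can be read, trying copies in priority order. */
final class RecoveryFallback {

    /**
     * Assumption of this sketch: a copy signals a problem by throwing a RuntimeException.
     * Recovery fails only if every copy in the list failed.
     */
    static <S> S restore(List<Supplier<S>> copiesInPriorityOrder) {
        IllegalStateException failure = new IllegalStateException("all state copies failed");
        for (Supplier<S> copy : copiesInPriorityOrder) {
            try {
                return copy.get();
            } catch (RuntimeException e) {
                failure.addSuppressed(e); // remember the problem and try the next copy
            }
        }
        throw failure;
    }

    public static void main(String[] args) {
        Supplier<String> localCopy = () -> {
            throw new IllegalStateException("local file is corrupt");
        };
        Supplier<String> primaryCopy = () -> "state from distributed store";
        System.out.println(restore(List.of(localCopy, primaryCopy)));
    }
}
```

A task with no matching secondary copy would simply pass a list that contains only the primary copy.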

Configuring task-local recovery

Task-local recovery is deactivated by default and can be activated through Flink’s configuration with the key state.backend.local-recovery as specified in CheckpointingOptions.LOCAL_RECOVERY. The value for this setting can either be true to enable or false (default) to disable local recovery.

Details on task-local recovery for different state backends

Limitation: Currently, task-local recovery only covers keyed state backends. Keyed state is typically by far the largest part of the state. In the near future, we will also cover operator state and timers.

The following state backends can support task-local recovery.

- FsStateBackend: task-local recovery is supported for keyed state. The implementation will duplicate the state to a local file. This can introduce additional write costs and occupy local disk space. In the future, we might also offer an implementation that keeps task-local state in memory.

- RocksDBStateBackend: task-local recovery is supported for keyed state. For full checkpoints, state is duplicated to a local file. This can introduce additional write costs and occupy local disk space. For incremental snapshots, the local state is based on RocksDB’s native checkpointing mechanism. This mechanism is also used as the first step to create the primary copy, which means that in this case no additional cost is introduced for creating the secondary copy. We simply keep the native checkpoint directory around instead of deleting it after uploading to the distributed store. This local copy can share active files with the working directory of RocksDB (via hard links), so for active files no additional disk space is consumed for task-local recovery with incremental snapshots either. Using hard links also means that the RocksDB directories must be on the same physical device as all the configured local recovery directories that can be used to store local state, or else establishing hard links can fail (see FLINK-10954). Currently, this also prevents using local recovery when RocksDB directories are configured to be located on more than one physical device.

Allocation-preserving scheduling

Task-local recovery assumes allocation-preserving task scheduling under failures, which works as follows. Each task remembers its previous allocation and requests the exact same slot to restart in recovery. If this slot is not available, the task will request a new, fresh slot from the resource manager. This way, if a task manager is no longer available, a task that cannot return to its previous location will not drive other recovering tasks out of their previous slots. Our reasoning is that the previous slot can only disappear when a task manager is no longer available, and in this case some tasks have to request a new slot anyway. With our scheduling strategy, we give the maximum number of tasks a chance to recover from their local state and avoid the cascading effect of tasks stealing their previous slots from one another.
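The scheduling rule above can be sketched as a single pass over the tasks: a task whose previous slot survived returns to it, and every other task gets a fresh slot instead of taking a slot that another task used before. The sketch is a simplified model with hypothetical names; slot identifiers are plain strings, and the `fresh-slot-N` names stand in for slots obtained from the resource manager.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

/** Simplified model of allocation-preserving scheduling after a failure. */
final class AllocationPreservingScheduling {

    /**
     * Assumption: every previous slot was used by exactly one task, so tasks that return
     * to their previous slot never compete with each other.
     */
    static Map<String, String> assignSlots(
            Map<String, String> previousSlotByTask, Set<String> survivingSlots) {
        Map<String, String> assignment = new LinkedHashMap<>();
        int freshSlots = 0;
        for (Map.Entry<String, String> entry : previousSlotByTask.entrySet()) {
            String previousSlot = entry.getValue();
            if (survivingSlots.contains(previousSlot)) {
                // Previous slot is still there: the task can recover from its local state.
                assignment.put(entry.getKey(), previousSlot);
            } else {
                // Previous slot is gone: take a fresh slot, never another task's previous slot.
                assignment.put(entry.getKey(), "fresh-slot-" + freshSlots++);
            }
        }
        return assignment;
    }
}
```

If one task manager with two slots is lost, only the two tasks that ran there move to fresh slots and restore from the primary copy; all other tasks keep their slots and their local state.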